From the agricultural revolution to the digital age, language served us well. Clever use of AI technologies can take us where language, with its limitations, cannot.

Riding Language from Agriculture to Enlightenment

Human advancement is tightly coupled with technology. First, we extended what evolution had equipped us with by using tools: rocks, sticks and shells expanded what our muscles, nails and teeth could achieve. But we were not unique in that. Other primates, some birds and some sea creatures also use tools. Where we separated from the crowd was when we managed to pass on how to make better tools from one generation to the next, accumulating improvements over the years.

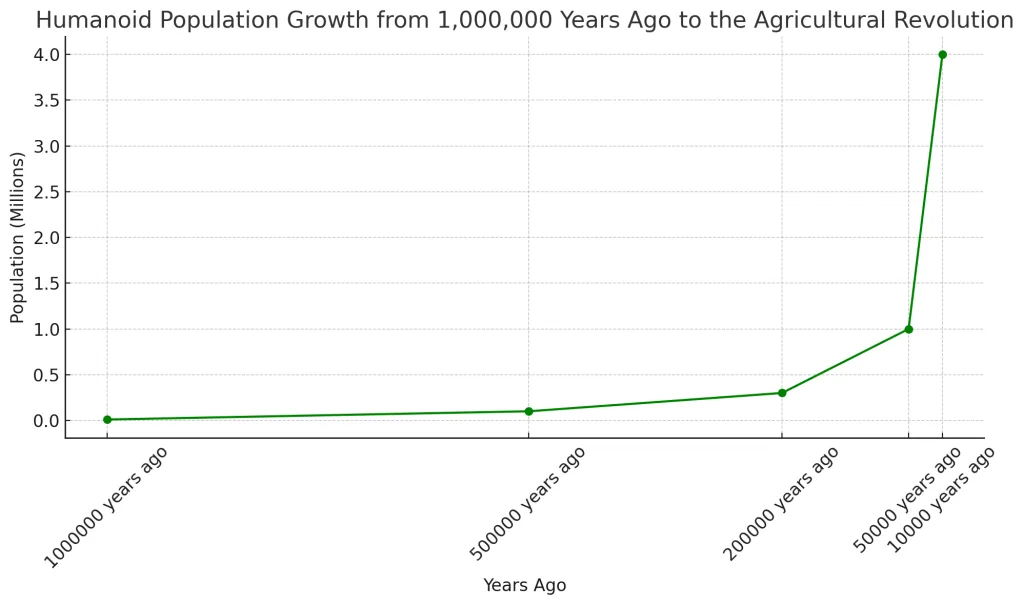

To do that, we needed better means of communication and the brain power to match. What is important is that for millions of years we used rocks and sticks formed into shapes (“The Stone Age”), and only about 12,000 years ago did we start agriculture. “Surprisingly” enough, that came not long after modern language matured, around 40,000 years ago, and more complex concepts could be transmitted: abstraction, the future, conditions, metaphors and so on. Without them it would be difficult to explain to the brat standing next to you in the grass how selecting a specific type of seed would yield better crops next season.

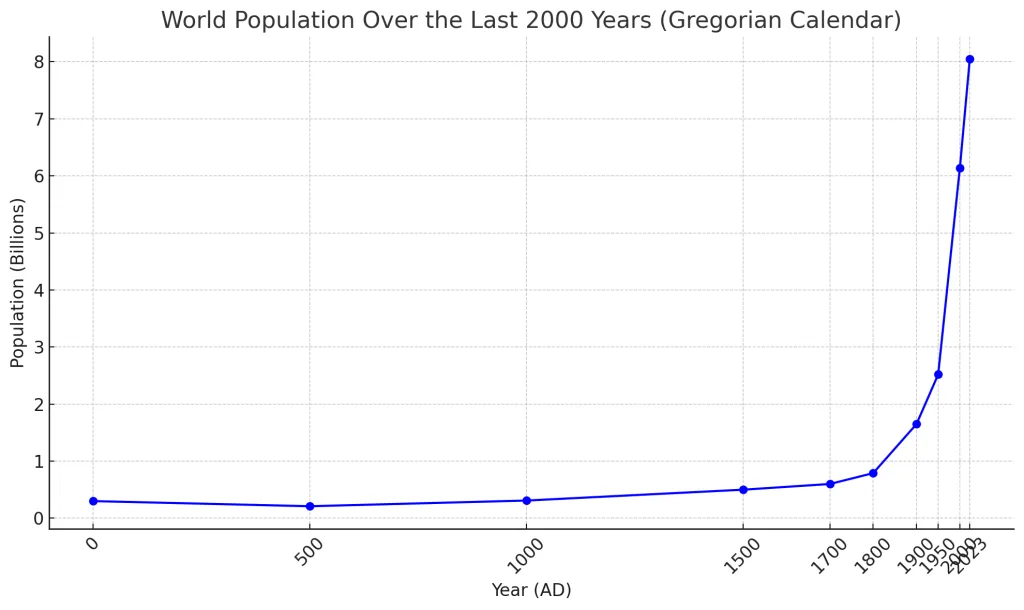

That worked pretty well until about the Enlightenment and the Industrial Revolution. During these times, the seeds of rapid innovation in technology and thought put humanity’s progress into hyper-speed. The simplest way to illustrate this is through population growth, a clear indicator of a species’ success.

During this period we invented things of growing complexity: government, law, finance, medicine, science, mathematics, transportation, means of communication and, most recently, computation. If up to the 1600s it was considered cool to be a Renaissance man (an architect, a doctor, a sculptor and a politician), that would be one doctor I would prefer not to visit today. This exponential growth in technology is the culmination of our means of transferring thought and knowledge: language. Multiply that by a growing population and we got more clever people able to invent and communicate, making more clever people, and so on.

But around that time (the 1600s), people started to suspect something was broken with language. René Descartes and John Locke thought it imprecise and subject to interpretation; later, Rudolf Carnap argued for more logic and Noam Chomsky recognized its limits in generating meaning. Claude Shannon, who fathered the digital age, described the bandwidth limits of communication in his information theory work, casting further suspicion on language as an inefficient means of transferring information. Today, cognitive scientists, AI researchers, linguists and philosophers are working on the problem, hoping modern technologies will enable a solution.

Why language reaching its end of life 300 years ago still severely affects our lives and holds humanity back today is a subject for another article. We will also not discuss proposals for improving word-based languages, compression, non-verbal mediums or fidelity. What follows is a look at the current state of AI and musings on whether freedom from the shackles of language can be glimpsed on the horizon.

Foundation Models and Anthills

When we chat with ChatGPT, Gemini or Claude (you guessed right: reportedly named after the Claude Shannon mentioned above), we are “prompting” a foundation model. Each of these companies took a huge amount of text from everywhere it could get its hands on (every page of every site on the internet, books, articles, code) and had a gazillion computers train a large language model (LLM) on it, forming an entity that holds the relations between parts of words and between words.

It is a little hard to visualize, so let’s try a metaphor.

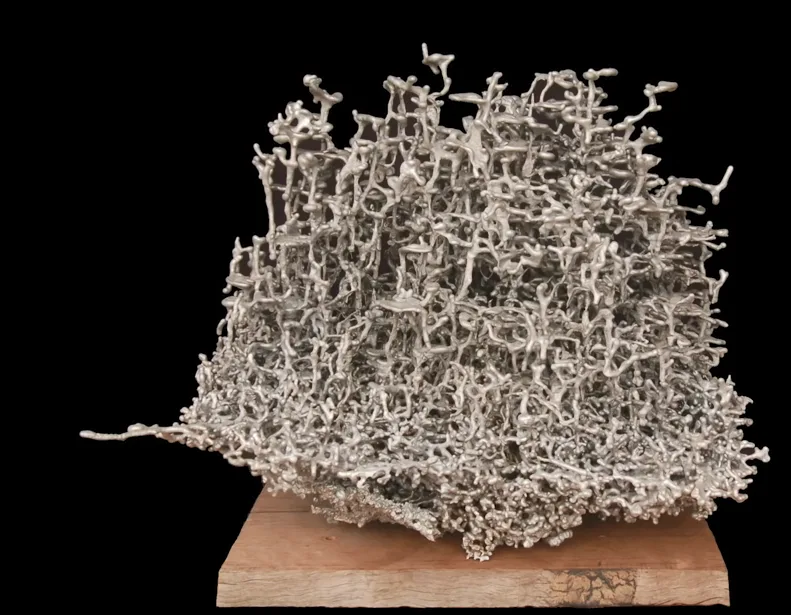

Consider the somewhat niche art of anthill sculptures, and think of a foundation model as a vast, intricate anthill. Each tunnel and chamber represents a connection between words, built by algorithms instead of ants. The deeper and more intricate the anthill, the better the model captures the nuances of language and the relationships between concepts. Only with no ants vowing revenge.

Now that the model is ready, we can “chat” with it: send it a question (a prompt), and it returns what it calculates to be the best way to “complete” (answer) it. The “deeper” and more finely detailed the model, the better the answer. So if we want better answers in a specific “obscure” area (“the sleeping habits of the gray hamster in eastern Tibet”), we need to train the model further on more (perhaps less publicly available) content about the subject. This is called “fine-tuning” the model.
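
To make “complete” and “fine-tuning” a little more tangible, here is a toy sketch, assuming a drastically simplified model: a bigram counter that completes a prompt by repeatedly picking the word that most often followed the previous one in its training text. Real foundation models use neural networks over subword tokens, not word counts; the function names and the hamster corpus are made up for illustration only.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def fine_tune(follows, extra_text):
    """'Fine-tune' the toy model in place by counting extra, niche text."""
    for word, counter in train_bigrams(extra_text).items():
        follows[word].update(counter)
    return follows


def complete(follows, prompt, max_words=5):
    """Greedily extend the prompt with the most frequent next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything: stop
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = "the hamster sleeps all day . the hamster eats seeds at night ."
model = train_bigrams(corpus)
print(complete(model, "the", max_words=3))  # prints: the hamster sleeps all

# Feed the model more niche text and the "best" completion changes.
fine_tune(model, "hamster eats seeds . hamster eats seeds .")
print(complete(model, "the", max_words=2))  # prints: the hamster eats
```

Ties are broken by `Counter.most_common`, which returns equally frequent words in the order they were first seen, so before fine-tuning “sleeps” wins over “eats”.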

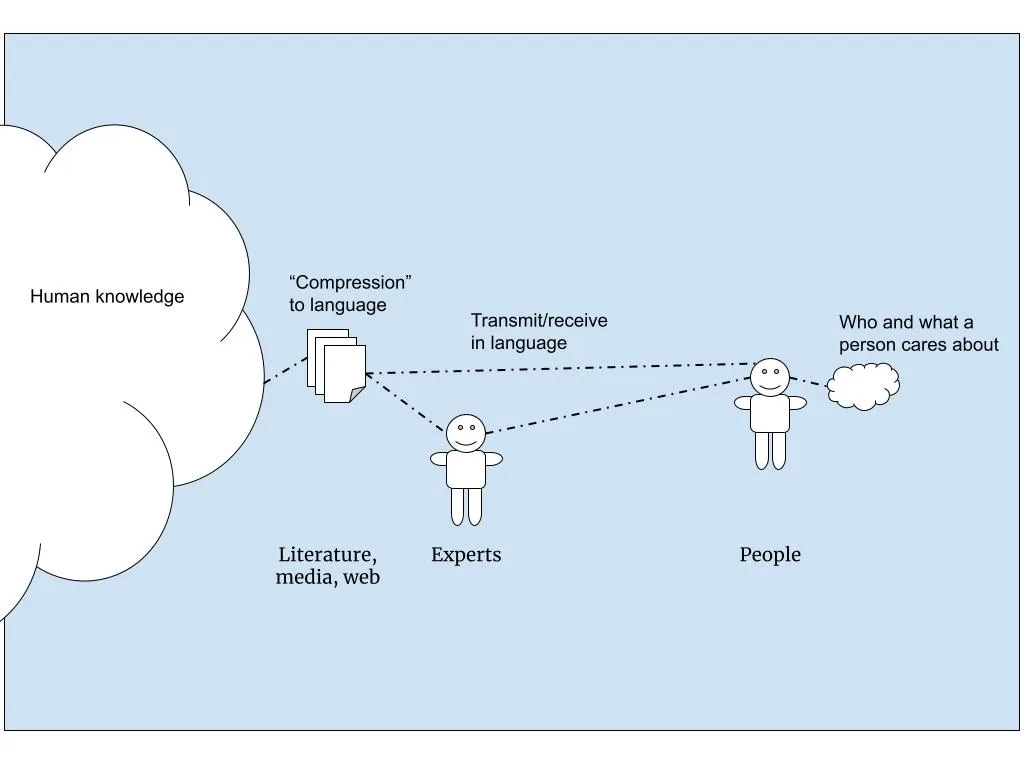

What is amazing about this is that the model, in a sense, represents human knowledge, at least the part that is available in writing. This “knowledge” does not equal “truth”, and it also evolves over time. So tactics similar to what Google did with PageRank back in the day are used to improve the reliability of what the model returns, giving more weight to credible and up-to-date sources. We are in the early days of these models, so expect fine-tuning to become less of a thing (as more “edge” content goes into the foundation models) and expect accuracy to go up (remember when Google results were full of scam redirect sites?).
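
PageRank is a good example of “giving more weight to credible sources”: a page counts for more when pages that themselves count link to it. Below is a minimal power-iteration sketch of the classic formulation with the usual damping factor of 0.85, run on a hypothetical four-page link graph. It only illustrates the idea; it makes no claim about how any AI vendor actually weighs its training data.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank; `links` maps each page to the pages it links to."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump").
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            targets = outgoing or pages  # a page with no links spreads rank evenly
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical link graph: nobody links to the scam site.
links = {
    "journal": ["encyclopedia"],
    "encyclopedia": ["journal"],
    "blog": ["journal", "encyclopedia"],
    "scam-site": ["blog"],
}
for page, score in sorted(pagerank(links).items(), key=lambda kv: -kv[1]):
    print(f"{page:>12}: {score:.3f}")
```

The scam site ends up with only the baseline share, while the pages that well-linked pages point to accumulate most of the weight.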

Communication Becomes Totally RAG

So where does communication come into all of this?

At first glance it seems GPT and the other foundation models are “better search engines” and save us time reading, understanding and writing. It surely saves a lot of time. But is that all?

Hopefully not.

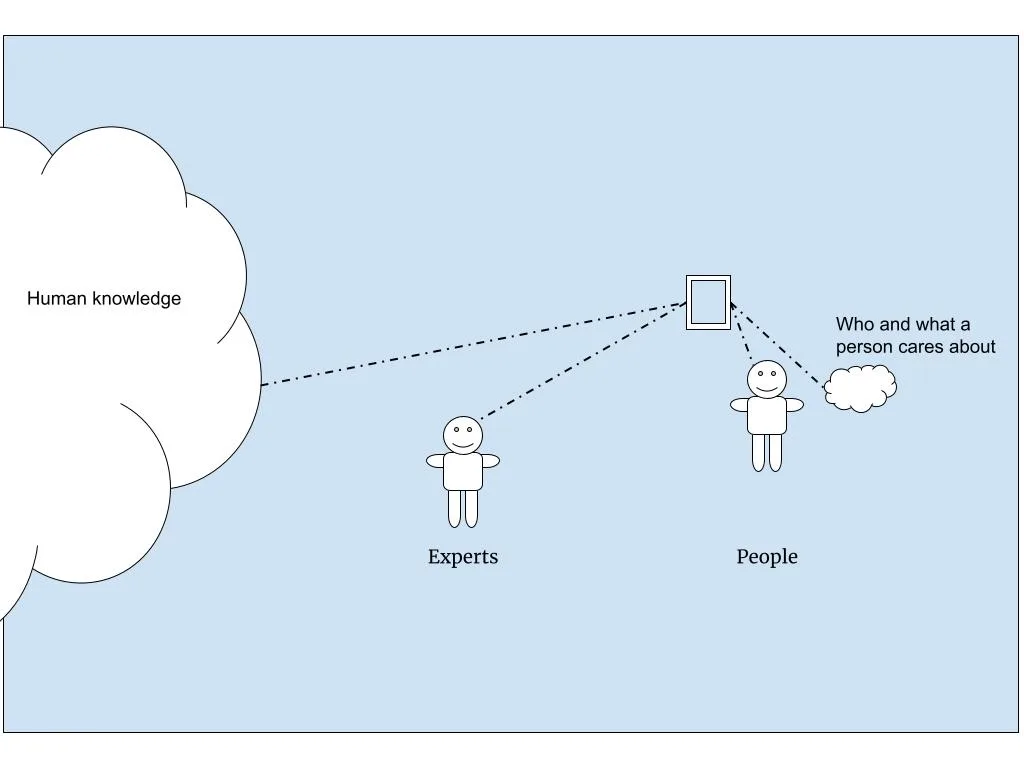

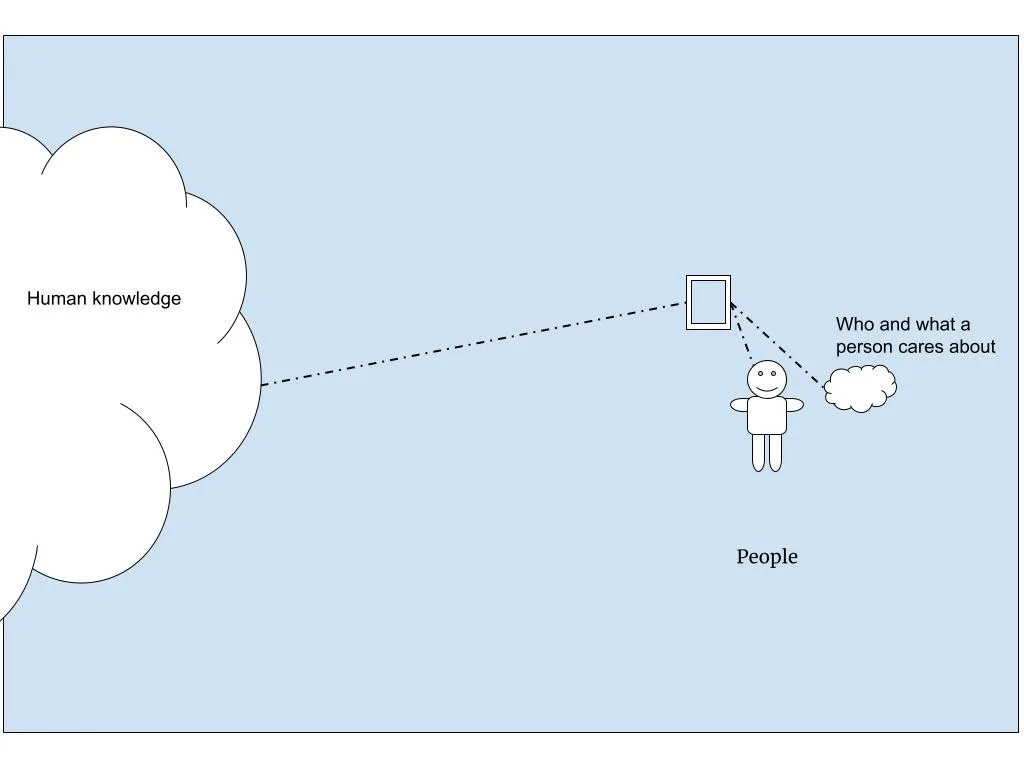

Broadband communication between people and Human Knowledge (I will drop the anthill metaphor from now on), as represented by the foundation models, can (and should) bypass the limitations of language. If a constantly updating cloud of shiny, nuanced, layered and complex knowledge is out there, why must we reduce communicating with it to language, a narrow, error-prone, slow and outdated method?

The first step should be Fact Checking

Imagine reading a news article and instantly knowing whether the claims are supported by evidence. When you see a story about a new magic pill, how does it stand up to credible research? What about the price you see on a product? Is it the best available?

That is the first step: having the knowledge check the narrow thing communicated to you (in speech or text) and give you the facts about it. A lens of truth over the world you interact with. Shazam for facts.

The second step is Subjectivity

When you interact with the world, you come with context: your subjective self, what you care about, your changing priorities and the people around you. This “subject” is a complex array of information, weights and relations. The more of it that is taken into account when interacting with “the knowledge”, the better. New methods such as RAG (retrieval-augmented generation) and its sibling ARAG are a step in the right direction.
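
The core idea of RAG is simple: before the model answers, retrieve the stored pieces of context most relevant to the question and hand them to the model together with it. A minimal sketch, assuming crude word-overlap scoring as a stand-in for the embedding similarity real systems typically use; the personal notes, stop-word list and function names are hypothetical, and the final call to a model is left out because it depends on the vendor’s API.

```python
import re

# Tiny stop-word list so filler words do not count as "relevant".
STOP_WORDS = {"a", "am", "and", "can", "for", "has", "i", "in", "is",
              "my", "the", "to", "what", "with"}


def tokenize(text):
    """Lowercase content words, ignoring punctuation and stop words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOP_WORDS


def retrieve(question, documents, k=2):
    """Return the k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=2):
    """Augment the question with the most relevant pieces of personal context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return f"Context about the user:\n{context}\n\nQuestion: {question}"


notes = [
    "I am vegetarian and avoid dairy.",
    "I am training for a marathon in October.",
    "My daughter has a peanut allergy.",
]
question = "What vegetarian dinner can I cook for a family with a peanut allergy?"
print(build_prompt(question, notes))
```

The marathon note is left out because it shares no content words with the question; the more of your “subject” that is stored and retrievable, the more personal the model’s answer can be.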

The third step is Agency

The way foundation models work today is that they do not work. I mean, they are lazy: they do not work for you. You need to tell them what you want in order to get anything from them. That too is limited, both by your needing to know what to ask and by the act of asking (in language). The solution is agency: the knowledge taking initiative based on what it knows you need (in the broad sense of the word) and communicating or acting on your behalf.

This part is a little further down the technology road but not so far. Today’s GPTs appear to “know” how to write not-so-terrible code. Code can run on computers and computers are known to be useful agents, when coded to do so. With a little back and forth – the knowledge grows agency.
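
The “little back and forth” can be pictured as a loop: the model proposes an action, ordinary code carries it out, and the result is fed back to the model until it decides it is done. A minimal sketch, assuming a hypothetical stub in place of a real model and a hypothetical price-lookup tool (echoing the product-price example above); nothing here reflects a specific vendor’s agent API.

```python
def stub_model(history):
    """Hypothetical stand-in for an LLM deciding the next action.

    A real agent would send the history to a model and parse its reply.
    """
    if not any(action == "check_price" for action, _ in history):
        return ("check_price", "headphones")
    return ("done", f"Best price found: {history[-1][1]}")


# Hypothetical tool registry; a real agent might call a shopping API here.
TOOLS = {
    "check_price": lambda item: {"headphones": 79.0}.get(item),
}


def run_agent(model, tools, max_steps=5):
    """Let the model pick actions, run them, and feed results back until done."""
    history = []
    for _ in range(max_steps):
        action, argument = model(history)
        if action == "done":
            return argument
        result = tools[action](argument)
        history.append((action, result))
    return None  # gave up after max_steps without finishing


print(run_agent(stub_model, TOOLS))  # prints: Best price found: 79.0
```

The `max_steps` cap is a deliberate design choice: an agent acting on your behalf should have a bound on how long it keeps going without reporting back.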

Are We At Science-Fiction Land Again?

Language as the sole means of communication has served us well: 40,000 years and ruling the planet is not bad. But it has been showing signs of weakness in the face of modernity’s challenges. For 300 years this has been apparent to thought leaders, yet it remained a problem with no practical solution. Many business and political leaders even consider the limitations of language not a problem but an advantage. But these shackles on humanity may now have a chance of being loosened.

The foundation models at the core of the recent rise of text-generative AI have the potential to communicate the truth, address subjectivity and create agency. They require clever implementation, but they are based on current, working technologies. This is not sci-fi. The only risks are that these technologies will “hit a wall” of quality (not find their PageRank), be too expensive to scale or be shackled by regulation. Even then, these will only be delays. If we thought recent centuries showed rapid development and that the state of humanity has never been better, there is hope that acceleration is right around the corner.

Closing Words on Calculators and Lazy GPS Users

“Will having all the knowledge accessible not make us stop reading, learning, thinking and inventing?”

I think not.

Pocket calculators (and supercomputers) did not end mathematics, and relying on GPS did not make us stop wandering around. Technological advancement frees us from mundane tasks; the only thing that changes is what we think of as mundane. Crafting words in clever ways to deliver a message used to be something to aspire to. But so were mending clothes and preserving food.

On the other hand – initiation, ideation, motivation, aspiration, inspiration – they are far from being replaced by technology.

This is also why we need not fear the rise of AI overlords. As mentioned above, the existing technology lacks agency, let alone motivation. Or intelligence. It is simply a well-organized anthill of all the words.