The many terms of AI, part 2. What you need for gossip, musing and talking-shop. Want the intuition-forming basics? Re-read part 1.

In part 1 we discussed the core terms of AI for building intuition. What you need to know to grasp the fundamentals while avoiding the noise of the inner workings.

But inner workings are not without interest.

The last section of this article lists terms which will really get you talking-shop. I omitted pure tech-talk. There is a limit to everything.

Second-tier Terms

GenAI

GenAI or Generative AI is the AI we are mostly talking about. It is what ChatGPT and Gemini are doing and what powers Apple Intelligence. It is AI that generates things – text, images, video, code, music.

So which AI is not GenAI?

Discriminative AI (image recognition, spam filtering, diagnosis), Predictive AI (fraud, marketing, forecasting), Reinforcement Learning (Chess/Go, robotics, recommendations), Expert Systems (medical, finance; these are human know-how technically incorporated into algorithms) – these are all AIs, but they are not GenAI.

Hallucinations, Bias, Safety and Alignment

Another popular topic is AI mistakes and “safety”.

Providing wrong replies as if they were facts (“hallucinating”) is very misleading and was a big concern in the early days of AI (a year ago…). There are two reasons why that was such a major issue –

- The foundation models do not “know” anything. They produce the most mathematically viable completion to your prompt. So if you asked something that was not factual but could be grammatically completed (“What was the name of Neil Armstrong’s cat that landed with him on the moon?”) – the AI produced a nonsensical but seemingly correct response.

- The instruction tuning made the answer sound “authoritative”.
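
The first point can be made concrete with a toy. What follows is only a sketch under loud assumptions: the “training text” is made up, and the “model” is a simple table of which word tends to follow which, nothing like the scale or design of a real foundation model. It still shows the mechanism: the completion is picked because it is frequent, and nobody checks whether it is true.

```python
from collections import Counter, defaultdict

# Tiny made-up "training text"; a real model sees a large part of the internet.
corpus = (
    "the name of the cat was tom . "
    "the name of the dog was rex . "
    "the name of the cat was tom ."
).split()

# Count which word follows which (a table of word pairs).
follows = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    follows[current_word][next_word] += 1

def complete(prompt, max_words=5):
    """Greedily append the most frequent next word; truth is never checked."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the last word never appeared in the training text
        next_word = candidates.most_common(1)[0][0]
        words.append(next_word)
        if next_word == ".":
            break
    return " ".join(words)

print(complete("the name of neil armstrong's cat"))
```

The toy happily names the cat, because “cat was tom” is the most common pattern it has seen. Whether such a cat ever existed plays no part in the calculation.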

When we search Google we use cues to assess quality and truthfulness (site design, type and location of ads, language style, website brand). These cues are missing in the AI response.

Bias, Safety and Alignment (with human “positive” values) are what people are trying to trip AI over. “Ha! Did you see how it referred to minorities without respect?! AI is the devil!”

But AIs do not have an opinion. They are based on content and math. If the content (i.e. the internet) has a mathematical bias entrenched in it – so will the AI.

This has been such a major concern that billions of dollars have been spent to solve it. You can actually see the differences between the replies of the safer (read “woke”) Gemini compared to the rebel ChatGPT.

Expect it to be more a thing of the past as time progresses (pun not intended).

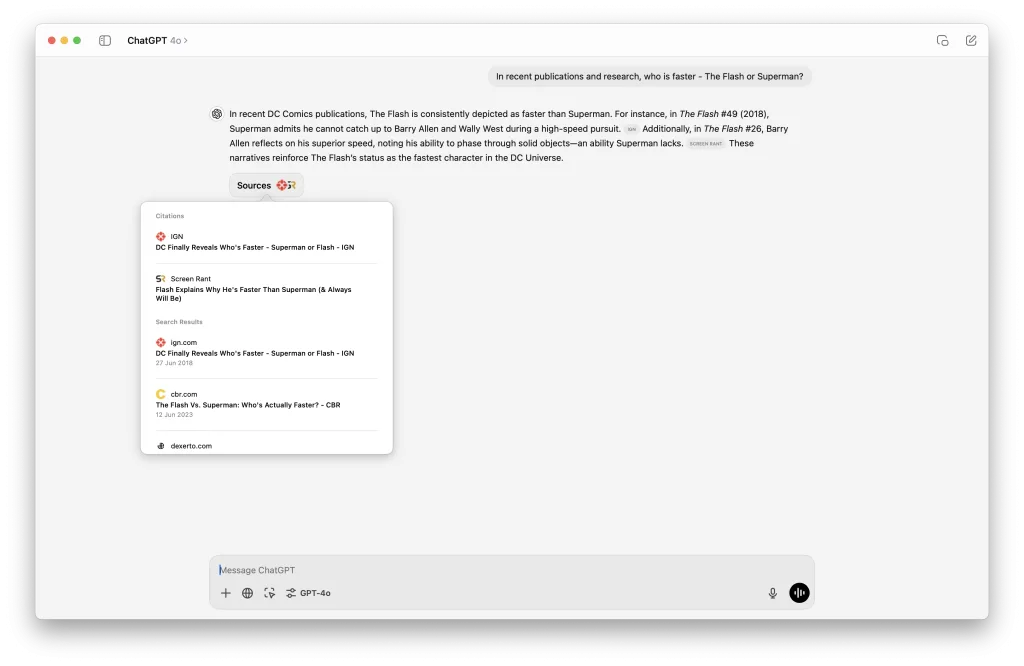

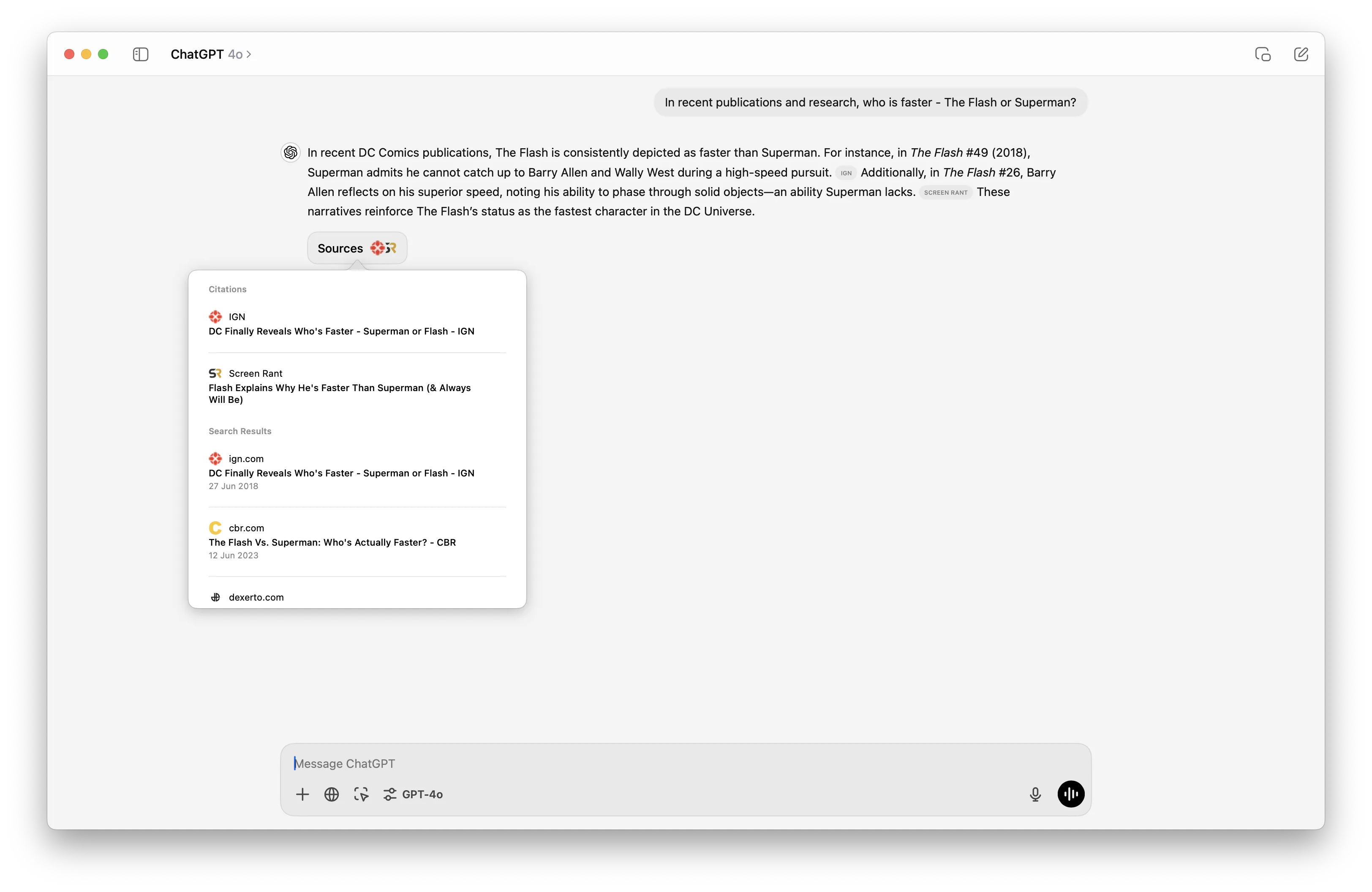

Traceability and Source Citation

Because the math behind the foundation models is so complex, the engineers cannot “explain” why a reply is what it is. They can check and see that in the majority of cases – it is correct (and fix it when it is not, as will be explained below). The ability to explain a reply is called traceability (you will also hear “explainability”) and there have been, and still are, a lot of debates about it. It is also one of the reasons for the mystification of the technology. The feeling that there is a “spirit in the machine” and philosophical discussions about awareness, emergence and the end of humanity soon follow.

The truth is much less mystical and the latest versions of AIs try to solve this problem by adding “citations” (sources and related content) to the replies – showing where they “originated” from. As our habits of what and how to trust evolve – this will be less of a concern. Similar to how we “trust” with a grain of salt what we read on the internet. Will it become better than the internet (as it mixes more sources with different credibility weights) and will a new SEO battle-field emerge (AIO)? Time will tell.

Reasoning

Problem solving, pattern recognition, decision making, deductive, inductive and abductive reasoning are all features we attribute to our amazing human brain and intelligence. Are they a property of some mysterious brain algorithm or directly emerging from language? Sorry, but it is not language.

So how can ChatGPT and Gemini claim they are good at reasoning? Because we people write logically. A lot. And so there are patterns to be found in the texts and images fed to the AIs that translate in turn to reasoning and logic. This is what the response imitates – it gives a reply that completes the prompt and holds the logic within its structure.

Emergence

Reasoning is one of the “emerging” features of foundation models.

Something we are happy to find and had not anticipated. It is a philosophical question whether logic, storytelling, narrative, innovation, coding, adaptation, personalization and emotional intelligence (!) are features of language or come from a different source in our human corpus.

My take is that even if they come from different sources (electric, chemical, biome) they manifest in language and create patterns which can be emulated by the foundation models.

So the AI seems to have emerged with signs of intelligence, but it is as emotional as the character of Moana is. Remember Anthropomorphism?

LLMs

Large Language Models. They are at the heart of the text generating foundation models.

That is all you need to know about them. They are complex software that takes in a lot of text, runs on a lot of expensive computers and produces a model (foundation) that when fed with text (prompt) returns the answer (reply) its calculations find the most “fitting”.

RLHF

Reinforcement Learning from Human Feedback (RLHF). A not so hidden secret of the major AI companies is that many human eyes watch over the results and fix them. Each correction is then fed to the foundation models so they get better and make fewer of these mistakes in the future.

Having real-people in the process does two things –

- Adds to accountability of the models in terms of bias, safety (and errors).

- Adds privacy concerns (someone can look at what you ask and trace it back to you personally since you are logged in).

How many people are watching over the AIs (and you…)? Thousands in the big companies. The exact number is not public.

Fun fact: the same process was (and still is) done by Google when it wants to refine its search results. To what extent? At its peak it is said that over 100,000 (!) people were employed at correcting search results manually.

Training

Feeding data (text, images, video) to the foundation models, fine-tuning, instruction tuning, RLHF – is all “training”. Since the knowledge and abilities of the AIs emerge from crunching a lot of data – this is the heart of the AIs we currently have (unlike Expert Systems mentioned before, which are human knowledge coded into software).

The reason we see a surge of AI is not because the field is new (it is more than 70 years old). It is because the data is now available (internet…) and the computer power to train the algorithms – is also available. 2018 is the year when data, computing and algorithms were mature enough to birth training. Remember that year if you meet a Sarah Connor.

GPUs

Graphics Processing Units (GPUs). They are the heart of… connecting screens to computers. In 2009 researchers found they are also great for training AI, not just playing games. Guess who happened to already be making plenty of GPUs? Correct. The not-so-known-if-you-were-not-a-gamer NVIDIA. They did not waste time and made sure everyone uses their chips. And oh-so-many chips are being used.

NVIDIA currently controls roughly 80% of the market but everyone (Intel, AMD, Google, Amazon, Apple, Microsoft) is hard at work to catch up.

GPUs also consume a lot of energy (with everything that means for the earth). So they are a “hot” topic at the moment.

AGI

Artificial General Intelligence (AGI). The holy grail of AI. A machine that will be able to be “humanly-good” at multiple tasks.

Another heated topic of debate.

First – what is “intelligence” is something people have been arguing about for centuries. Then what would be considered “general” is also a source of misalignment.

Today’s AIs are not really general (though they can do more than one thing) and not intelligent. They appear to be (instruction tuning and Anthropomorphism) but do not have motivation, intention, goals, agency and to be honest – mostly show us how language is key to our perception of intelligence.

In order to jump forward to the next level and get closer to AGI there needs to be either “emergence” of intelligence from current technologies (LLMs etc) or new core-algorithms invented and executed.

Neuro-symbolic AI, Evolutionary Algorithms and Meta-learning are some big words you can throw at conversations. But do not bet on seeing anything resembling real AGI in the near future (business leaders in the field have other motivations when they claim differently).

Superintelligence and Singularity

What people are losing sleep over is not AGI though. It is what happens after AGI – when there will be no stopping it (reaching a technological singularity point). If it is as clever and able (and driven) as a person – it will start improving itself at an unimaginable rate. Why wait for evolution to increase IQ over millennia if it can simulate iterations in milliseconds, and thus become superintelligent?

What would a machine with IQ 500 do? How about IQ 1,000,000? One thing is sure – it will look at us as we do at ants.

Is it something realistic we should worry about in the foreseeable future? Probably not. Tons of sci-fi books are based on this imagination. There is a big gap between a foundation model enabling easy access to human knowledge and a sentient being. Anthropomorphism is again messing with our reason.

Alternatively – we could already be living in a simulation. In that case Morpheus, red pill please.

Third-tier Terms

ML, Transformers, Neural Networks, CNNs, Deep Learning, Parameters, Self-Attention, NLP, Tokens, TTS

There are a bunch of other terms circling AI. They are of lesser importance. Not because tossing them at dinner will not impress your listeners – it is just that I think knowing them does not contribute to intuition. They are part of the “it should simply work and improve” category. Below you will find a select collection.

Machine Learning (ML) is the process of giving a machine a lot of data and asking it to find patterns. Once it has these patterns learned – it can be fed with new data and make predictions. It is the parent class of the technology the AIs we are familiar with are using. LLM is a type of ML.
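
For the curious, here is about the smallest possible illustration of “find a pattern, then predict”. It is a sketch with made-up numbers (hours studied and test scores are my assumption, not real data), and the “pattern” is just a straight line fitted with the classic least-squares formula. Real ML models find far richer patterns, but the two-step shape is the same.

```python
# Made-up training data: hours studied -> test score.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 61.0, 70.0, 79.0, 88.0]

def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Step 1: "learn" the pattern from the data.
slope, intercept = fit_line(hours, scores)

# Step 2: use the learned pattern on data the machine has never seen.
def predict(x):
    return slope * x + intercept

print(predict(6.0))
```

Nobody told the program that each extra hour is worth 9 points. It worked that out from the examples, which is the whole idea.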

Remember the T in GPT? It stands for Transformer. It is a specific design of neural network that is very good at recognizing patterns (especially in language).

Neural Networks and Convolutional Neural Networks (CNNs) are types of technologies used in the foundation models to find patterns in text and to identify images. They take inspiration from how the brain works (many neurons connected to each other in complex ways). Part of the magic of AI originates from the difficulty of tracing the outcome these networks produce.

When a Neural Network has multiple layers (and all of them do), it becomes “deep” and hence the term Deep Learning (DL).

Inside the Neural Network there are Parameters whose values are adjusted as the network “learns”. They are the little “knobs” that are being turned as the tuning of the model progresses.
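
To see one knob being turned, here is a sketch with a single parameter. Everything in it is an assumption for illustration: the data is made up to follow y = 3 * x, the “model” is just y = w * x, and the tuning method is plain gradient descent (a standard technique, shown here in its simplest form). Real models do the same thing with billions of knobs at once.

```python
# Made-up data that follows y = 3 * x; the model has to discover the 3.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

w = 0.0               # the single parameter ("knob"), starting at an arbitrary value
learning_rate = 0.05  # how far the knob is turned on each step

for step in range(200):
    # Slope of the average squared error with respect to w:
    # it tells us which way to turn the knob to reduce the error.
    gradient = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    w -= learning_rate * gradient

print(round(w, 3))
```

After enough small turns the knob settles very close to 3, the value hidden in the data.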

In 2017 a landmark paper called “Attention is All You Need” was published (by scientists working at Google) and suggested the use of “Self-Attention” to direct the models to the most important parts of the input. It is key to Transformers and basically made NLP (Natural Language Processing) what it has become today. Without it – no GPT and no Gemini.
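
If you want a feel for what “Self-Attention” computes, here is a stripped-down sketch. The big simplification (my assumption, not how production models are built): real Transformers first pass every token through learned weight matrices to get separate queries, keys and values, while this toy uses the raw token vectors for all three. The three 2-number “token vectors” are made up as well.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = input."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # How strongly does this token "look at" every token (itself included)?
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # New representation: a weighted average of all token vectors.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
    return outputs

# Three made-up 2-dimensional token vectors.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
result = self_attention(tokens)
for row in result:
    print([round(x, 3) for x in row])
```

Each token ends up as a blend of all the tokens, weighted by how similar they are to it. That is the “directing to the most important parts of the input” in miniature.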

The data that is fed into the models while teaching them and prompting them is numbers (not letters or words). In order to “translate” text into numbers – the text is broken into sub-parts which are called “tokens”. These parts can be words, parts of words and even single characters. The ability of the models to work with ever-growing size of prompt is measured in tokens. Whatever.
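
Here is a toy tokenizer, for those who refuse the “whatever”. It is a sketch built on assumptions: the vocabulary is hand-picked (real models learn theirs from data, and it is vastly larger), the splitting rule is a simple “take the longest piece that fits”, and the numbers are handed out in order of first appearance rather than taken from a fixed table as real models do.

```python
# A made-up vocabulary; real models learn theirs from data.
VOCAB = ["token", "iz", "ation", "un", "believ", "able", " "]

def tokenize(text):
    """Greedy longest-match split; unknown text falls back to single characters."""
    pieces = []
    position = 0
    while position < len(text):
        match = next(
            (piece for piece in sorted(VOCAB, key=len, reverse=True)
             if text.startswith(piece, position)),
            text[position],  # fallback: one character
        )
        pieces.append(match)
        position += len(match)
    return pieces

def to_ids(pieces):
    """Give each distinct piece a number, in order of first appearance."""
    ids = {}
    return [ids.setdefault(piece, len(ids)) for piece in pieces]

pieces = tokenize("tokenization unbelievable")
print(pieces)
print(to_ids(pieces))
```

Two words turn into seven tokens, and it is the list of numbers, not the letters, that the model actually receives.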

The last term that gets our attention (…) is TTS. Text To Speech. It is the cool ability of ChatGPT, Gemini and Siri to produce voice (understanding your voice is the reverse direction, called Speech To Text or STT). How it works is not so important. It is a user interface that connects you to the foundation models. And like chat (and instruction tuning) it makes working with a complex technology easier. No magic added.

That’s it.

Terms that are important (part 1), terms that are important for gossip and terms for endurance athletes.

And if you made it this far – wow (I’m talking to you, Gemini, no human has).
