Why language is a bottleneck for intelligence, collaboration and progress

---

An apology: This is a long and rather dense article. As those who spoke to me in person in the past years know, language and its brokenness are an obsession of mine. This article is an attempt to put some of the understanding I gathered into words. Using my nemesis, language.

If you choose the red pill and read it till the end, there is only one thing I can offer you. The truth.

---

In 2003, philosopher Nick Bostrom published a now-famous paper suggesting that The Matrix might not be fiction but a plausible model of reality. His argument wasn’t mystical, it was probabilistic. Given the pace of technological progress, he reasoned, advanced civilizations could easily simulate countless virtual worlds, making it increasingly likely that we are already simulated. Elon Musk, never shy about extrapolation, took it further. He claimed the probability we’re in base reality, the original non-simulated universe, is “one in billions.”

Whether that’s true or not is beyond this article’s scope. But there’s another “Matrix” we are definitely living in. One that shapes our thoughts, limits our progress and quietly governs our civilization.

It’s called human language.

This is not an academic paper. There are no experiments cited, no formal models proposed. But it’s not a philosophical musing either. I’ll reference studies, trace logic and apply inference. As you’ll see, this is not a subtle or niche concern. It’s an Everest-in-the-room. So big we’ve stopped seeing it.

It is, though, a personal article.

Language has been “haunting” me for years. I (still) do not have the right mix of holy water, silver bullets and wooden stakes, but I can point at the root of evil.

Let’s start.

Humans are a technology-oriented species. Since sticks and stones, we’ve embraced our tech revolutions. But unlike steam, electricity, digitization, or networks, the current wave of AI enthusiasm is deeply entangled with language. At this point in history, given our scale and reliance on an outdated medium, we’re heading into a perfect storm.

Language is our civilization’s most dangerous fallacy.

Why do we need language in the first place?

Language, at its origin, was one of evolution’s most brilliant creations.

Knowledge, or more precisely, information, is stored in our minds in a deeply individualized way. Not just in our minds, as we’ll explore later, and not just in us. What each of us “knows” is shaped by experience, wiring and context. Take a simple word like dog. My wife and I might both say “dog” and agree we’re referring to the same thing. But our internal representations are different: shaped by the dogs we encountered, the state of our brains on those occasions, cultural signals, emotions, even genetics.

When we both look at the same animal, we share a similar perception (which is actually philosophically debated) but the way the concept is wired into our respective brains is not the same. Information in the brain isn’t stored like files on a hard drive. It’s shaped through overlapping, adaptive neural networks formed by years of experience. Each of us starts from a completely different baseline. Shared words refer to vastly different internal maps.

Actually, when we referred to neural networks, we were simplifying a great deal. The genius of language as a solution is staggering.

Limited Scope, Unfathomable Potential

Neurons and synapses are just the beginning. The true scope of our cognitive machinery is far larger and blurrier than we’ve long assumed. The human brain houses about 86 billion neurons, 85 billion glial cells and 100 trillion synapses. Each synapse isn’t just an on/off switch. It operates across multiple dimensions: firing frequency, signal amplitude, synaptic plasticity. Estimates suggest a single synapse can exist in 26 distinct configurations. And that doesn’t even include glial cells, whose computational role in the brain is only now being uncovered.

That’s just the brain. We now know that memory (information) and cognition stretch far outside the skull.

Information is distributed throughout the body: in our “second brain”, the enteric nervous system of the gut, where our “gut feeling” comes from; in somatic tissues (on the cellular level) where motor learning (and trauma) are stored; in the immune system, which maintains a memory of pathogens and communicates with the brain; even in the very architecture of muscle memory.

Now mix in the chemistry: neurochemical modulation allows for over 10,000 distinct contextual states by some estimates, controlling how we think and respond. And beneath it lies the genetic and epigenetic encoding. A kind of molecular firmware shaping the long-term and cross-generational landscape of cognition. But cognition and knowledge are not stored just in “our” body.

The microbiome plays a major role here as well. Tens of trillions of microbial agents in the gut, on our skin and within every cell inside and around our ‘body’ play a central role in shaping our moods, cognition and actions. This interplay with the external environment is captured in emerging frameworks like the exposome (the sum of all exposures across a lifetime), bioecological models (which treat humans as embedded in layered ecosystems) and neuroecology (which studies how environments shape the brain directly).

In other words, reality isn’t processed solely in the brain. We have a massively parallel, ecologically embedded system of perception. Which makes direct knowledge transfer between two people effectively impossible. We can’t project a synaptic map from one person into another. Let alone ecological maps. The differences are beyond comparison. We need an agreed interface. One that encodes, compresses and reconstructs meaning in a different mind.

That interface is language.

Language allows parties to sync. These parties do not have to be people. They don’t even need to belong to the same species. Trees and fungi, bacteria and humans, all sync using different forms of language.

Excellent for Collaboration, Terrible for Knowledge

Humans, a highly social and industrious species, evolved a sophisticated language system. Optimized not for data transmission but for coordination, bonding and shared attention. Let me repeat that: Not for data transmission. Human language is lean, adaptive and full of redundancy to handle noise in the channel. We often understand each other despite broken syntax, ambiguity and noise. Let’s take a few examples to illustrate:

“There is food on that hill after rain”

“Do not eat the mushroom with the white spots”

“Fred is not to be trusted alone with your wife”

“We should group with the Joneses to hunt for antelope”

So many ambiguities (food, that, after, mushroom, with, Fred, trusted, wife, group, antelope) and still perfectly clear. But we are not here to discuss how wonderful human language is.

Let’s dive into some technicalities. As a transmission protocol, human speech can encode sound at about 40 bits per second, a number drawn from Claude Shannon’s foundational work in information theory. But that’s a raw audio channel. In terms of real language, where meaningful, novel information is required to be transferred, what some studies call “non-redundant semantic payload”, the effective rate is far lower. Estimates from Jaeger, Levy and others suggest human language conveys around 1 to 5 bits per second of actual new content in real-world scenarios.

That’s the low end. To estimate the high end, imagine a focused, well-prepared expert delivering a university course. Let’s say a course in Organic Chemistry. A dense textbook on the subject holds roughly 5 megabytes of highly structured knowledge. The course can be conveyed in 30 hours of lectures. That’s 40 million bits over roughly 100,000 seconds (108,000, to be exact). Rounding up, that gives us a generous upper limit: 400 bits per second.
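For readers who like to check the envelope, here is a minimal sketch of that upper-bound arithmetic. It uses only the round figures from the paragraph above; treating one megabyte as 10⁶ bytes is my assumption, and the function name is purely illustrative.

```python
# Back-of-envelope upper bound on lecture bandwidth.
# Inputs are the article's round figures; 1 MB = 10**6 bytes is an assumption.
TEXTBOOK_BYTES = 5 * 10**6   # "roughly 5 megabytes"
LECTURE_HOURS = 30           # "30 hours of lectures"


def lecture_bit_rate(num_bytes: int, hours: float) -> float:
    """Average bits per second if the whole text is conveyed in the given time."""
    bits = num_bytes * 8
    seconds = hours * 3600
    return bits / seconds


rate = lecture_bit_rate(TEXTBOOK_BYTES, LECTURE_HOURS)
print(f"{rate:.0f} bits per second")  # prints "370 bits per second"
```

The unrounded result sits a little under 400, so the round number is on the generous side, which is what an upper limit should be.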

Here’s our working range: 1 to 400 bits per second. In most real-world conditions, it hovers well below 100.

It doesn’t need to be exact. You just need to keep it in mind when you see what comes next.

Simulation is Safer Than Experimentation

Let’s go back to the storage side of the equation, the brain and its counterparts.

The exact figure is debated, but in 2015 a research group at the Salk Institute estimated the human brain’s storage capacity at around 8 petabits (8 × 10¹⁵ bits). Let’s write this number on an imaginary back of an envelope. Now let’s move to the rest of the cognitive system we’ve described. We need to estimate the capacity of the body’s other information-bearing systems: the enteric nervous system, immune memory, motor circuits, somatic storage, microbiome, chemical states, etc. The jury is still out on how much each can store, but if we add a conservative estimate to our back-of-envelope calculation, we land in the vicinity of 109 petabits. That’s 1.09 × 10¹⁷ bits.

To put that into perspective, imagine you try to share that with someone. To describe not just the thoughts you’re conscious of, but also the subtle states, the sensory weight, the full context. And imagine they’re actually listening. No interruptions, you find the right words instantly and there are no misunderstandings. Even so, at 1 bit per second, the low end of our range, it would take about 3.5 billion years. You should have started talking back when the Earth was young.

That’s the scale of mismatch between what we hold and what we can tell.

But evolution is anything but inefficient. So where’s the catch? Probably in the fact that most of what our ecosystem stores isn’t meant to be shared. It’s used for operations.

Here’s a breakdown (the numbers are taken from various sources and are estimated):

- Autonomic and operational information, like regulating your heartbeat, temperature and balance, accounts for 60%-70% of brain activity.

- Procedural and implicit knowledge, like playing the piano, riding a bike, being creative or an expert, takes another 20%-30%.

- Explicit, conscious content, the stuff you can describe in language, is only about 5%-10%.

Assuming a 7% explicit share, that brings us down to a mere 250 million years or so to explain what we consciously have in mind.
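The time scales above can be reproduced with a few lines of arithmetic. This is only a sketch under the article's own assumptions (1.09 × 10¹⁷ bits in total, a 7% explicit share, the 1 to 400 bits per second working range); the year length and the function name are my choices. The quoted figures appear to correspond to the low end of the range, 1 bit per second.

```python
# How long would it take to "say" everything, at different speaking rates?
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, an assumption

TOTAL_BITS = 1.09e17     # the article's whole-system estimate
EXPLICIT_SHARE = 0.07    # the article's assumed explicit fraction


def years_to_speak(bits: float, bits_per_second: float) -> float:
    """Years of uninterrupted talking needed to transmit `bits`."""
    return bits / bits_per_second / SECONDS_PER_YEAR


for rate in (1, 100, 400):
    full = years_to_speak(TOTAL_BITS, rate)
    explicit = years_to_speak(TOTAL_BITS * EXPLICIT_SHARE, rate)
    print(f"{rate:>3} bit/s: {full:.2e} years in full, {explicit:.2e} years explicit only")
```

At 1 bit per second this gives roughly 3.5 billion years for everything and roughly 240 million years for the explicit share alone. Even at the generous 400 bits per second, the explicit share still takes hundreds of thousands of years.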

Still makes no sense.

We need to add another layer of filtering. The fact we have access (conscious, explicit) to a very large storage of knowledge does not mean it is relevant or meant to be communicated. It is used for modeling the world. It’s a predictive engine, simulating time, behavior and outcomes.

These abilities are exactly why it makes sense for our brain and supportive systems to take so much energy and be such a factor in our evolutionary success. Simulation is safer and more efficient than experimentation.

The Simulation Explosion

The ability to create models has compounding effects. Humans can build models of models. And models of models of models.

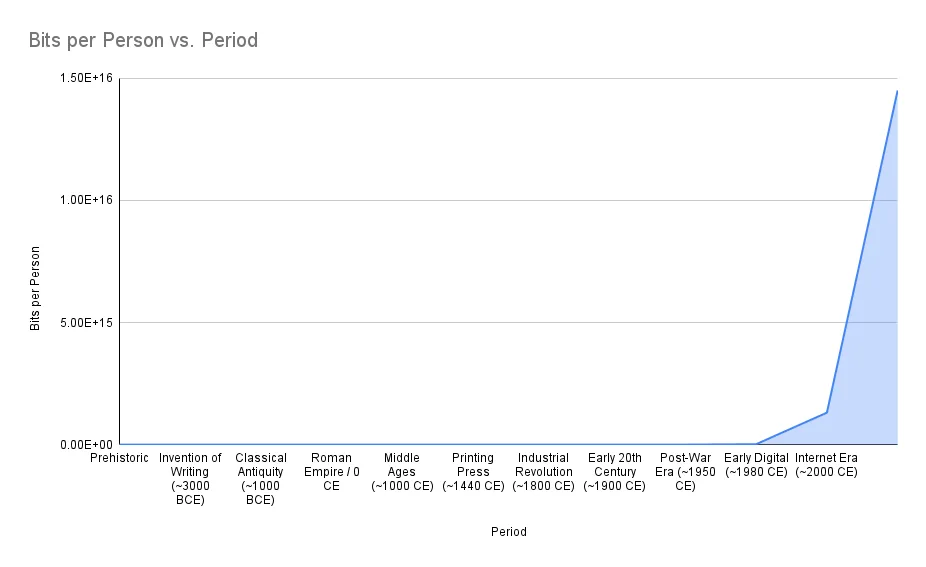

The creation of external, out-of-body information storage systems and the growing density of human populations sent this trajectory through the roof.

While this proved beneficial in terms of population growth, it happened in less than a blink of an eye on an evolutionary clock. The mechanism we use to transfer knowledge, language, did not have time to catch up.

In ancient times, transfer rates between 1 and 100 bits per second were sufficient. There wasn’t much explicit knowledge that needed to be transferred and most learning could happen through immersion and observation. “Here’s how you throw a spear, kiddo.”

In modern times, that gap has become a major inefficiency.

Take Organic Chemistry from before. The subject contains megabytes of structured information. It requires both implicit and explicit assimilation in order to be applied. Storing it in the human system is not the problem, there’s plenty of storage space. But acquiring it takes years. Between 700 and 4,000 hours, depending on the required level of mastery.
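As a rough consistency check, we can turn those study hours back into an effective transfer rate. This sketch assumes the 5-megabyte textbook figure used earlier stands in for "the subject" (my assumption, as is the function name), and it counts only explicit content, so it says nothing about the implicit part of mastery.

```python
# Effective bit rate implied by the time it takes to learn a subject.
COURSE_BYTES = 5 * 10**6  # assumption: the 5 MB textbook figure from earlier


def effective_rate(num_bytes: int, hours: float) -> float:
    """Bits per second actually absorbed, averaged over the study time."""
    return num_bytes * 8 / (hours * 3600)


for hours in (700, 4000):
    print(f"{hours:>4} hours -> {effective_rate(COURSE_BYTES, hours):.1f} bits per second")
```

Under these assumptions the two ends come out at about 16 and about 3 bits per second, comfortably inside the working range and well below 100.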

We’ve increased our capacity to simulate the world, but not our capacity to transmit those simulations.

And that evolved into a major problem.

The Most Extreme Case of Inefficiency

Let’s repeat this. The information can easily reside in the brain/ecosystem, but when we need to transfer it to another person, since our means of transfer is language, it can take years(!) to complete. For comparison, a 10 Gbit/s USB port in your computer can transfer the 5-megabyte Organic Chemistry course in about 4 milliseconds. That’s just for explicit knowledge. Implicit knowledge (becoming a trained expert at something), which is often much more valuable, is beyond the reach of language altogether.
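The USB comparison is easy to sanity-check. The sketch below assumes the course is the 5-megabyte textbook from earlier and uses nominal USB signaling rates, ignoring protocol overhead, so real-world transfers would be somewhat slower. The dictionary and function names are illustrative only.

```python
# Time to push the 5 MB course through a USB link at nominal signaling rates.
COURSE_BITS = 5 * 10**6 * 8  # assumption: the 5 MB textbook figure from earlier

# Nominal rates in bits per second; protocol overhead is ignored.
USB_RATES_BPS = {
    "USB 2.0 (480 Mbit/s)": 480e6,
    "USB 3.2 Gen 1 (5 Gbit/s)": 5e9,
    "USB 3.2 Gen 2 (10 Gbit/s)": 10e9,
}


def transfer_ms(bits: float, bits_per_second: float) -> float:
    """Transfer time in milliseconds at a constant bit rate."""
    return bits / bits_per_second * 1000


for name, bps in USB_RATES_BPS.items():
    print(f"{name}: {transfer_ms(COURSE_BITS, bps):.1f} ms")
```

A figure of about 4 milliseconds corresponds to a 10 Gbit/s port. Even an old USB 2.0 port finishes in under a tenth of a second, against hundreds or thousands of hours through language.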

What are the implications of this extreme case of inefficiency? Plenty. But the problem is not just inefficiency. The medium (language) and its slowness make the process vulnerable to errors and ‘hacking’, also known as marketing, education and politics.

AI Powered by Pedals

Top-of-their-field thinkers, inventors, and even linguists have recognized the problem of language and tried to solve it for centuries. They failed, time and again.

In recent years, however, in a way similar to planes that took flight without flapping wings, we invented a “brain” able to contain knowledge without being organic. Explicit knowledge is now handled by LLM-based AIs, and non-LLM systems may soon acquire implicit knowledge such as riding a bike, driving a car, or playing the piano.

This is a big deal because it means we no longer need to rely on the accumulation of knowledge in people, its translation into language, and its transfer to others. We can keep feeding a central “reservoir” with new knowledge and abilities as they emerge, then duplicate and retrieve from it whenever needed.

But, and this is a big but, we still have to connect ourselves to this hub through language. We may no longer depend on verbal/illustrated transfer between individuals, but to “download” information into our brains and use it, we remain tied to good-old, extremely inefficient language. Current AIs are airplanes powered by pedals.

The solution? Reliance on machines.

Let them communicate M2M (machine-to-machine) at “USB” speed, not wait years for hack-prone language. How humans will interface with these machines is still open ground for invention.

---

Actually, I’ve been getting my feet wet in that innovation. And by feet, I mean all the way up to my neck. But on that, later.
